Transposed convolution (also known as deconvolution) performs an operation that is partly similar to upsampling. GAN models use it to construct the generative network.
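To make the link between transposed convolution and upsampling concrete, here is a minimal sketch in plain JavaScript. It is not part of TensorSpace; the function name `conv2dTransposeNaive` is hypothetical, and the sketch assumes a single channel, no padding, and the same stride in both dimensions.

```javascript
// Naive single-channel 2-D transposed convolution (no padding).
// Every input value is multiplied by the whole kernel and accumulated into
// the output at an offset given by the stride. Hypothetical helper for
// illustration only; it is not a TensorSpace API.
function conv2dTransposeNaive(input, kernel, stride) {
  const inH = input.length;
  const inW = input[0].length;
  const kH = kernel.length;
  const kW = kernel[0].length;
  const outH = (inH - 1) * stride + kH;
  const outW = (inW - 1) * stride + kW;
  const output = Array.from({ length: outH }, () => new Array(outW).fill(0));

  for (let i = 0; i < inH; i++) {
    for (let j = 0; j < inW; j++) {
      for (let ki = 0; ki < kH; ki++) {
        for (let kj = 0; kj < kW; kj++) {
          output[i * stride + ki][j * stride + kj] += input[i][j] * kernel[ki][kj];
        }
      }
    }
  }
  return output;
}

// A 2x2 kernel of ones with stride 2 copies each input value into a 2x2 block.
const upsampled = conv2dTransposeNaive([[1, 2], [3, 4]], [[1, 1], [1, 1]], 2);
console.log(upsampled);
// → [ [ 1, 1, 2, 2 ], [ 1, 1, 2, 2 ], [ 3, 3, 4, 4 ], [ 3, 3, 4, 4 ] ]
```

With a kernel of ones and a stride equal to the kernel size, the result matches nearest-neighbour upsampling. A trained kernel holds learned weights instead, which is why the operation is only partly the same as upsampling.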

Constructor

Configure the Layer differently depending on whether the TensorSpace Model loads a pre-trained model before initialization. See the Layer Configuration documentation for more information about the basic configuration rules.

〔Case 1〕If the TensorSpace Model has loaded a pre-trained model before initialization, there is no need to configure network model related parameters.

TSP.layers.Conv2dTranspose();

〔Case 2〕If there is no pre-trained model before initialization, it is required to configure network model related parameters.

[Method 1] Use filters, kernelSize and strides

TSP.layers.Conv2dTranspose( { filters : Int, kernelSize: Int, strides: Int } );

[Method 2] Use shape

TSP.layers.Conv2dTranspose( { shape : [ Int, Int, Int ] } );

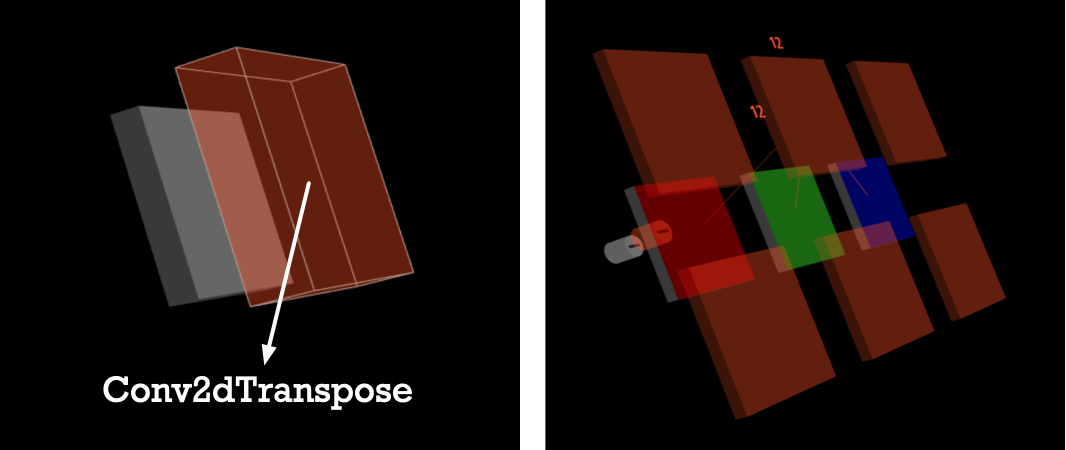

Fig. 1 - Conv2dTranspose layer closed and open

Arguments

| Name Tag | Type | Instruction | Usage Notes and Examples |
|---|---|---|---|
| name | String | Name of the layer | For example, name: "layerName". In Sequential Model: it is highly recommended to add a name attribute to make it easier to get the Layer object from the model. In Functional Model: it is required to configure the name attribute for a TensorSpace Layer, and the name should be the same as the name of the corresponding Layer in the pre-trained model. |
| filters | Int | Number of filters, Network Model Related | filters: 16 |
| padding | String | Padding mode, Network Model Related | valid[default]: Without padding. It drops the right-most columns (or bottom-most rows). same: With padding. Output size is the same as input size. |
| kernelSize | Int \| Int[] | The dimension of the convolution window, Network Model Related | The 2d convolutional window. For example, for a square kernel, configure kernelSize to be 3; for a rectangular kernel, configure kernelSize to be [1, 7]. |
| strides | Int \| Int[] | The strides of the convolution, Network Model Related | Strides in both dimensions. [default] Automatic inference, strides: [1, 1]. For example, if the strides in width and height are the same, configure strides to be 2; if they are different, configure strides to be [1, 2]. |
| shape | Int[] | Output shape, Network Model Related | For example, shape: [ 28, 28, 6 ] means the output is 3-dimensional, with 6 feature maps, each 28 by 28. Data format is channel last. |
| color | Color Format | Color of layer | Conv2dTranspose's default color is #FF5722 |
| closeButton | Dict | Close button appearance control dict. More about close button | display: Bool. true[default] shows the button, false hides the button. ratio: Int. Multiplier of the close button's normal size, default is 1. For example, setting ratio to 2 makes the close button twice the normal size. |
| initStatus | String | Layer initial status. Open or Close. More about Layer initial Status | close[default]: Closed at beginning, open: Open at beginning |
| animeTime | Int | The speed of open and close animation | For example, animeTime: 2000 means the animation will last 2 seconds. Note: Configuring animeTime in a specific layer will override the model's animeTime configuration. |
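The relationship between kernelSize, strides, padding and the resulting shape can be sketched with the common transposed-convolution arithmetic. The helper below is hypothetical and is not TensorSpace's own inference code. It assumes channel-last shapes, that the first entry of kernelSize and strides applies to the first spatial dimension of the shape, and that "same" padding follows the Keras convention of input size times stride (which equals the input size when strides is 1).

```javascript
// Hypothetical helper: estimate the output shape of a transposed convolution.
// Assumptions (not taken from TensorSpace source):
//   "valid": out = (in - 1) * stride + kernel
//   "same" : out = in * stride
function toPair(value) {
  // Accept either a single Int or an [Int, Int] pair.
  return Array.isArray(value) ? value : [value, value];
}

function conv2dTransposeOutputShape(inputShape, config) {
  const { filters, kernelSize, strides = 1, padding = "valid" } = config;
  const [in0, in1] = inputShape;
  const [k0, k1] = toPair(kernelSize);
  const [s0, s1] = toPair(strides);
  const size = (len, k, s) => (padding === "same" ? len * s : (len - 1) * s + k);
  // Channel last: the number of filters becomes the last dimension.
  return [size(in0, k0, s0), size(in1, k1, s1), filters];
}

console.log(conv2dTransposeOutputShape([24, 24, 3], { filters: 6, kernelSize: 5, strides: 1 }));
// → [ 28, 28, 6 ]
```

Under these assumptions, a 24 by 24 input with kernelSize 5 and strides 1 yields the [ 28, 28, 6 ] shape used as the example in the table. Frameworks can differ in edge cases, for instance when the stride is larger than the kernel, so treat the result as an estimate.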

Properties

.inputShape : Int[]

The shape of the input tensor. For example, inputShape = [ 28, 28, 3 ] represents 3 feature maps, each 28 by 28.

Available after model.init(); otherwise undefined.

.outputShape : Int[]

The shape of the output tensor, which is 3-dimensional.

The data format is channel last. For example, outputShape = [ 32, 32, 4 ] means the output of this layer has 4 feature maps, each 32 by 32.

Available after model.init(); otherwise undefined.

.neuralValue : Float[]

The intermediate raw data after this layer.

Available after load and model.predict(); otherwise undefined.

.name : String

The customized name of this layer.

Available once the layer is created.

.layerType : String

The type of this layer. Returns the constant string Conv2dTranspose.

Available once the layer is created.
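As an illustration of what "channel last" means for the shapes above, the following sketch regroups a flat array of values into one 2-D feature map per channel. The helper `splitFeatureMaps` is hypothetical, and the sketch assumes the values are flattened in row-major order with the channel index varying fastest. Whether neuralValue is laid out exactly this way is an assumption, not something this page states.

```javascript
// Hypothetical helper: regroup a flat, channel-last array into per-channel
// 2-D feature maps. Assumes row-major order with the channel index varying
// fastest; this layout is an assumption for illustration.
function splitFeatureMaps(values, shape) {
  const [rows, cols, channels] = shape;
  if (values.length !== rows * cols * channels) {
    throw new Error("values length does not match shape");
  }
  const maps = [];
  for (let c = 0; c < channels; c++) {
    const map = [];
    for (let r = 0; r < rows; r++) {
      const row = [];
      for (let k = 0; k < cols; k++) {
        row.push(values[(r * cols + k) * channels + c]);
      }
      map.push(row);
    }
    maps.push(map);
  }
  return maps;
}

// Shape [ 2, 2, 2 ]: two feature maps, each 2 by 2.
const featureMaps = splitFeatureMaps([0, 1, 2, 3, 4, 5, 6, 7], [2, 2, 2]);
console.log(featureMaps);
// → [ [ [ 0, 2 ], [ 4, 6 ] ], [ [ 1, 3 ], [ 5, 7 ] ] ]
```

In a page where TensorSpace is loaded and model.predict() has run, the same helper could in principle be called with a layer's neuralValue and outputShape, provided the layout assumption holds.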

Methods

.apply( previous_layer ) : void

Links this layer to the previous layer.

This method can be used to construct the topology in a Functional Model.

See Construct Topology for more details.

.openLayer() : void

Opens the layer. If the layer is already in "open" status, it stays open.

See Layer Status for more details.

.closeLayer() : void

Closes the layer. If the layer is already in "close" status, it stays closed.

See Layer Status for more details.

Examples

If the TensorSpace Model loads a pre-trained model before initialization, there is no need to configure network model related parameters.

let transposeLayer = new TSP.layers.Conv2dTranspose( {
    // Recommended configuration. Required for TensorSpace Functional Model.
    name: "conv2dTranspose1",
    // Optional configuration.
    animeTime: 4000,
    initStatus: "open"
} );

If there is no pre-trained model before initialization, it is required to configure network model related parameters.

let transposeLayer = new TSP.layers.Conv2dTranspose( {
    // Required network model related configuration.
    kernelSize: 5,
    filters: 6,
    strides: 1,
    // Recommended configuration. Required for TensorSpace Functional Model.
    name: "conv2dTranspose2",
    // Optional configuration.
    animeTime: 4000,
    initStatus: "open"
} );

Use Case

When you add a de-convolution layer to your model with Keras, TensorFlow, or TensorFlow.js, the corresponding API in TensorSpace is Conv2dTranspose.

| Framework | Documentation |
|---|---|
| Keras | keras.layers.Conv2DTranspose(filters, kernel_size, strides=(1, 1)) |
| TensorFlow | tf.nn.conv2d_transpose(value, filter, output_shape, strides) |
| TensorFlow.js | tf.layers.conv2dTranspose (config) |
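When no pre-trained model is loaded, the network related arguments have to be written by hand. A minimal sketch of how the fields of a Keras Conv2DTranspose layer config could be renamed to the argument names documented above is shown here. The helper `kerasToTensorSpaceConfig` is hypothetical and is not provided by TensorSpace. The Keras field names (name, filters, kernel_size, strides, padding) are those found in a saved Keras layer config.

```javascript
// Hypothetical helper: rename the fields of a Keras Conv2DTranspose layer
// config to the argument names used by TSP.layers.Conv2dTranspose.
// Not part of TensorSpace; for illustration only.
function kerasToTensorSpaceConfig(kerasConfig) {
  return {
    name: kerasConfig.name,
    filters: kerasConfig.filters,
    kernelSize: kerasConfig.kernel_size,
    strides: kerasConfig.strides,
    padding: kerasConfig.padding
  };
}

const layerConfig = kerasToTensorSpaceConfig({
  name: "conv2d_transpose_1",
  filters: 6,
  kernel_size: [5, 5],
  strides: [1, 1],
  padding: "valid"
});
console.log(layerConfig);
// In a page where TensorSpace is loaded, the result could be passed on:
// let transposeLayer = new TSP.layers.Conv2dTranspose( layerConfig );
```

Keeping the Keras layer name unchanged matters for the Functional Model, where the TensorSpace layer name should match the name of the corresponding layer in the pre-trained model.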

Source Code